Recognising Displaced People from Images by Exploiting Dominance Level


Introduction

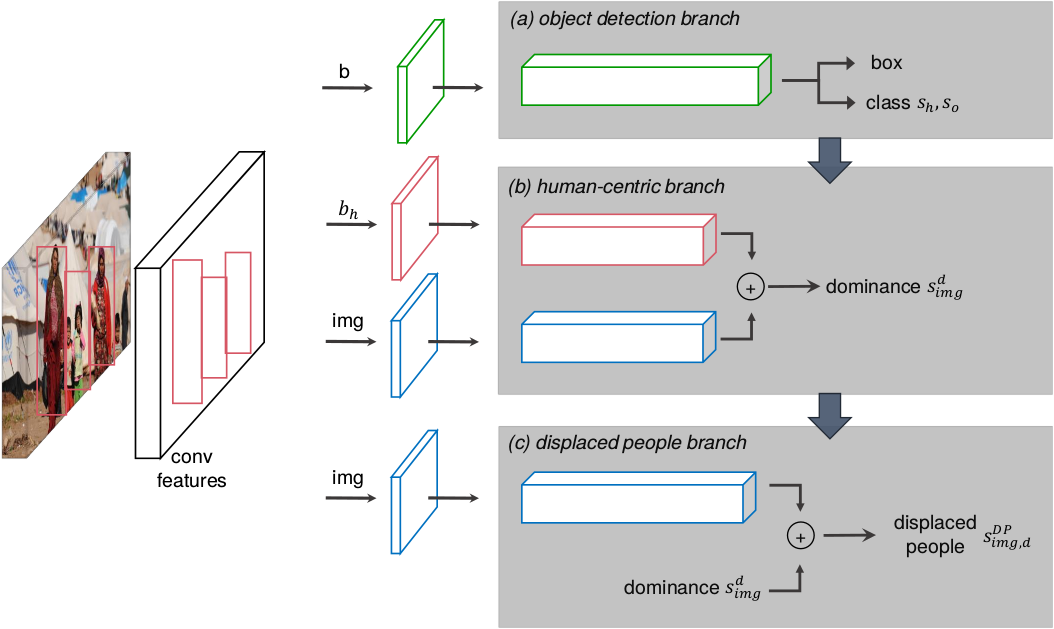

To reduce the amount of manual labour required for human-rights-related image analysis, we introduce DisplaceNet, a novel model which infers potential displaced people from images by integrating the dominance level of the situation and a CNN classifier into one framework.

Grigorios Kalliatakis Shoaib Ehsan Maria Fasli Klaus McDonald-Maier

To appear in the 1st CVPR Workshop on Computer Vision for Global Challenges (CV4GC)

[arXiv preprint]

[poster coming soon...]
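DisplaceNet combines a CNN's scene-level prediction with the dominance level of the people in the image. The snippet below is only a toy illustration of what "integrating" two such signals can look like. It is not the fusion rule from the paper. The function names, the averaging over people, the blending weight and the linear mapping are all hypothetical. Dominance is assumed to follow the 1 to 10 scale of the EMOTIC continuous dimensions.

```python
from statistics import mean

# Assumption: EMOTIC-style dominance estimates lie in [1, 10].
DOMINANCE_MIN, DOMINANCE_MAX = 1.0, 10.0


def overall_dominance(person_scores):
    """Hypothetical: average the per-person dominance estimates of one image."""
    if not person_scores:
        raise ValueError("need at least one detected person")
    return mean(person_scores)


def fuse(p_displaced, person_scores, weight=0.3):
    """Hypothetical late fusion of a CNN probability with a dominance level.

    p_displaced: CNN probability that the image shows displaced people.
    person_scores: dominance estimates for the people in the image.
    weight: illustrative blending weight (not a value from the paper).
    Lower dominance pushes the fused score towards "displaced".
    """
    d = overall_dominance(person_scores)
    # Map dominance to [0, 1], where 1 means "least in control of the situation".
    low_control = (DOMINANCE_MAX - d) / (DOMINANCE_MAX - DOMINANCE_MIN)
    return (1 - weight) * p_displaced + weight * low_control
```

For example, `fuse(0.55, [2.5, 3.5, 3.0])` raises the CNN's 0.55 slightly because the people in the image have low average dominance. See the paper for how DisplaceNet actually combines the two branches.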

Dependencies

- Python 2.7+

- Keras 2.1.5+

- TensorFlow 1.6.0+

Usage

Clone the repository:

$ git clone https://github.com/GKalliatakis/DisplaceNet.git

Inference with pretrained models

To run inference on a single image with DisplaceNet, use the script below. See run_DisplaceNet.py for the full list of selectable parameters.

$ python run_DisplaceNet.py --img_path test_image.jpg \
--hra_model_backend_name VGG16 \
--emotic_model_backend_name VGG16 \
--nb_of_conv_layers_to_fine_tune 1
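Both backbones in the command above are VGG16. For VGG16, the standard Keras `vgg16.preprocess_input` uses the "caffe" convention: channels are flipped from RGB to BGR and the ImageNet per-channel means are subtracted, with no scaling to [0, 1]. The NumPy-only sketch below implements that convention. It is an assumption that run_DisplaceNet.py preprocesses images exactly this way. Resizing to the network's input size (224x224 for standard VGG16) is left to your image library.

```python
import numpy as np

# ImageNet channel means in BGR order, as used by Keras' "caffe" preprocessing.
BGR_MEANS = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def vgg16_preprocess(rgb_image):
    """Convert an RGB image array of shape (H, W, 3) into a VGG16 input batch.

    Returns a float32 array of shape (1, H, W, 3) in BGR order with the
    per-channel ImageNet means subtracted.
    """
    x = np.asarray(rgb_image, dtype=np.float32)
    if x.ndim != 3 or x.shape[-1] != 3:
        raise ValueError("expected an (H, W, 3) RGB image")
    x = x[..., ::-1] - BGR_MEANS  # RGB -> BGR, then zero-centre each channel
    return x[np.newaxis, ...]     # add the batch dimension
```

The returned batch has the layout that a Keras model's `predict` method expects for a single image.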

Inference results: DisplaceNet vs. vanilla CNNs

Run inference on a single image with DisplaceNet and display the results alongside those of vanilla CNNs (as shown in the paper). For example, to reproduce the example comparison image, run the following script. See displacenet_vs_vanilla.py for a list of selectable parameters.

$ python displacenet_vs_vanilla.py --img_path test_image.jpg \
--hra_model_backend_name VGG16 \
--emotic_model_backend_name VGG16 \
--nb_of_conv_layers_to_fine_tune 1
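run_DisplaceNet.py and displacenet_vs_vanilla.py accept the same four documented flags. To process a folder of images, you can build the same command once per file. The sketch below assumes it is run from the repository root. The helper names `build_command` and `run_folder` are hypothetical, and the commands use only the flags shown in this README.

```python
import subprocess
import sys
from pathlib import Path


def build_command(script, img_path, hra_backend="VGG16",
                  emotic_backend="VGG16", conv_layers=1):
    """Return the argv list for run_DisplaceNet.py or displacenet_vs_vanilla.py."""
    return [
        sys.executable, script,
        "--img_path", str(img_path),
        "--hra_model_backend_name", hra_backend,
        "--emotic_model_backend_name", emotic_backend,
        "--nb_of_conv_layers_to_fine_tune", str(conv_layers),
    ]


def run_folder(folder, script="displacenet_vs_vanilla.py"):
    """Run the chosen script once per .jpg image in `folder`; stop on the first failure."""
    for img in sorted(Path(folder).glob("*.jpg")):
        subprocess.run(build_command(script, img), check=True)
```

For example, `run_folder("my_images")` would run the comparison script on every JPEG in `my_images/`. Passing the arguments as a list, rather than as one shell string, avoids quoting problems with file names that contain spaces.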

Training DisplaceNet's branches from scratch

- To train the displaced people branch on the HRA subset, run the training script below. See train_hra_2class_unified.py for a list of selectable parameters.

$ python train_hra_2class_unified.py --pre_trained_model vgg16 \
--nb_of_conv_layers_to_fine_tune 1 \
--nb_of_epochs 50

- To train the human-centric branch on the EMOTIC subset, run the training script below. See train_emotic_unified.py for a list of selectable parameters.

$ python train_emotic_unified.py --body_backbone_CNN VGG16 \
--image_backbone_CNN VGG16_Places365 \
--modelCheckpoint_quantity val_loss \
--earlyStopping_quantity val_loss \
--nb_of_epochs 100
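The `--nb_of_conv_layers_to_fine_tune` flag suggests that only the last few convolutional layers of the pretrained backbone are updated during training. The sketch below illustrates that idea. It makes two assumptions: the backbone uses the standard layer names of `keras.applications.VGG16(include_top=False)`, and every layer from the first unfrozen conv layer onwards is also trainable. Check the training scripts for how the flag is actually interpreted.

```python
# Layer names of keras.applications.VGG16(include_top=False). The input layer's
# name varies between Keras versions, so it is written generically here.
VGG16_LAYERS = [
    "input",
    "block1_conv1", "block1_conv2", "block1_pool",
    "block2_conv1", "block2_conv2", "block2_pool",
    "block3_conv1", "block3_conv2", "block3_conv3", "block3_pool",
    "block4_conv1", "block4_conv2", "block4_conv3", "block4_pool",
    "block5_conv1", "block5_conv2", "block5_conv3", "block5_pool",
]


def trainable_flags(layer_names, nb_conv_to_fine_tune):
    """Return one bool per layer: True from the N-th last conv layer onwards."""
    conv_positions = [i for i, name in enumerate(layer_names) if "_conv" in name]
    if nb_conv_to_fine_tune <= 0:
        return [False] * len(layer_names)  # freeze the whole backbone
    if nb_conv_to_fine_tune > len(conv_positions):
        raise ValueError("more conv layers requested than the model has")
    first_trainable = conv_positions[-nb_conv_to_fine_tune]
    return [i >= first_trainable for i in range(len(layer_names))]
```

With a real Keras model, you would apply the flags before compiling, for example: `for layer, flag in zip(model.layers, trainable_flags([l.name for l in model.layers], 1)): layer.trainable = flag`. With the flag set to 1, only `block5_conv3` (plus the pooling layer after it) would be updated in the backbone.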

Please note that training the human-centric branch yourself requires the HDF5 file containing the preprocessed images and their respective annotations (10.4 GB).
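The `--modelCheckpoint_quantity val_loss` and `--earlyStopping_quantity val_loss` options indicate that both checkpointing and early stopping monitor the validation loss, in the spirit of Keras' `ModelCheckpoint` and `EarlyStopping` callbacks. The plain-Python sketch below simulates that logic over a list of per-epoch validation losses. The `patience` and `min_delta` defaults are hypothetical choices, not settings taken from train_emotic_unified.py.

```python
def monitor_val_loss(val_losses, patience=5, min_delta=0.0):
    """Simulate "save best" checkpointing plus early stopping on val_loss.

    Returns (best_epoch, stopped_epoch), both 0-indexed. best_epoch is the
    epoch whose weights a save-best-only checkpoint would keep; stopped_epoch
    is the last epoch that would actually be run.
    """
    if not val_losses:
        raise ValueError("need at least one epoch")
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch  # checkpoint would save here
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch  # early stopping triggers
    return best_epoch, len(val_losses) - 1
```

Monitoring the same quantity for both callbacks means that the saved model is always the one from the best validation epoch, even when training continues for a few epochs after that point.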

Data of DisplaceNet

We publicly release the data used to train DisplaceNet.

The Human Rights Archive (HRA) is the core of our dataset and was used to train DisplaceNet.

The constructed dataset contains 609 images of displaced people and the same number of non-displaced counterparts for training, as well as 100 images collected from the web for testing and validation.
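Because the training set is balanced, a trivial classifier that always predicts the same class would reach only 50% accuracy on it. Here is a quick check of the counts stated above. The split of the 100 web images between testing and validation is not specified, so they are counted together.

```python
# Counts taken from the dataset description above.
displaced_train = 609
non_displaced_train = 609
web_images_test_val = 100

train_total = displaced_train + non_displaced_train
majority_baseline = max(displaced_train, non_displaced_train) / train_total

print(f"training images: {train_total}")                    # 1218
print(f"majority-class baseline: {majority_baseline:.0%}")  # 50%
print(f"all images: {train_total + web_images_test_val}")   # 1318
```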

Results

Performance of DisplaceNet

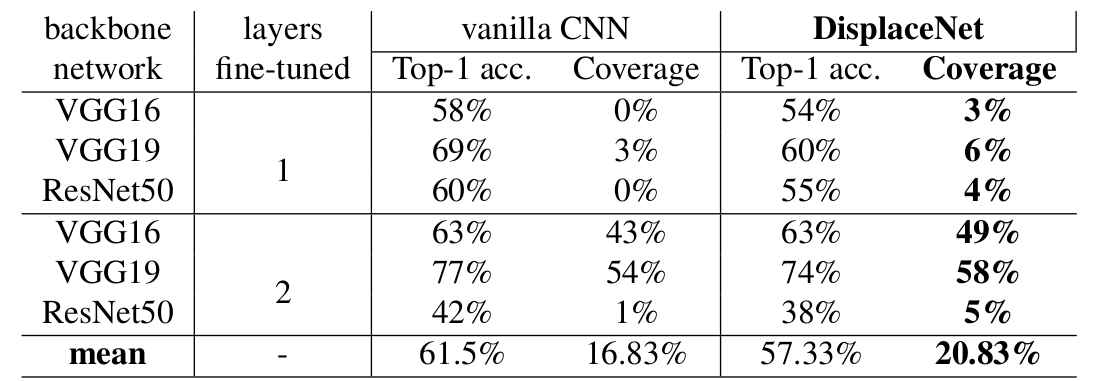

We report the performance of displaced people recognition using DisplaceNet and, for comparison, the performance of vanilla CNNs trained with various network backbones. The comparison uses two metrics: accuracy and coverage (the proportion of a dataset for which a classifier is able to produce a prediction).
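Coverage only matters for a classifier that can decline to predict. The sketch below represents "no prediction" as `None`; why a model abstains is outside its scope. It computes coverage exactly as defined above. It also assumes that accuracy is measured over the covered images only; whether the paper reports accuracy this way should be checked there. The function name is hypothetical.

```python
from typing import Optional, Sequence, Tuple


def accuracy_and_coverage(
    predictions: Sequence[Optional[str]], labels: Sequence[str]
) -> Tuple[float, float]:
    """Compute (accuracy, coverage) for a classifier that may abstain.

    A prediction of None means the classifier produced no output for that
    image. Coverage is the fraction of images that received a prediction;
    accuracy is measured over those covered images only (NaN if none).
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("empty evaluation set")
    covered = [(p, y) for p, y in zip(predictions, labels) if p is not None]
    coverage = len(covered) / len(labels)
    if not covered:
        return float("nan"), coverage
    correct = sum(p == y for p, y in covered)
    return correct / len(covered), coverage
```

Reporting both numbers matters because a model can raise its accuracy simply by abstaining more often, which shows up as lower coverage.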

Citing DisplaceNet

If you use our code in your research or wish to refer to the baseline results, please use the following BibTeX entry:

@article{kalliatakis2019displacenet,
  title={DisplaceNet: Recognising Displaced People from Images by Exploiting Dominance Level},
  author={Kalliatakis, Grigorios and Ehsan, Shoaib and Fasli, Maria and McDonald-Maier, Klaus D},
  journal={arXiv preprint arXiv:1905.02025},
  year={2019}
}


Repo will be updated with more details soon!

Make sure you have starred and forked this repository before moving on!


Hashes for DisplaceNet-0.1-py2-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 2a547c9d35604c3d27dd60788344c8415505e206a9cf33e042dec0dbf986ce3e |
| MD5 | bbdc6a199065a0f3f4e12e310f9c8656 |
| BLAKE2b-256 | 6993e040ab9cb7442b57c356d6c1861b3f442e645af017a40201dff20edef69a |
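To check that a downloaded wheel matches the SHA256 digest published for DisplaceNet-0.1-py2-none-any.whl, you can hash the file with Python's standard `hashlib` module. The sketch below reads the file in chunks. The path you pass in is wherever you saved the download.

```python
import hashlib

# SHA256 digest published for DisplaceNet-0.1-py2-none-any.whl.
EXPECTED_SHA256 = "2a547c9d35604c3d27dd60788344c8415505e206a9cf33e042dec0dbf986ce3e"


def sha256_of(path, chunk_size=1 << 16):
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_wheel(path, expected=EXPECTED_SHA256):
    """Return True if the file's SHA256 digest matches the expected one."""
    return sha256_of(path) == expected.lower()
```

pip can also enforce this at install time if you pin the package with a `--hash=sha256:...` entry in a requirements file and install with `pip install --require-hashes -r requirements.txt`.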